Dynamic Textures Modeling

Results

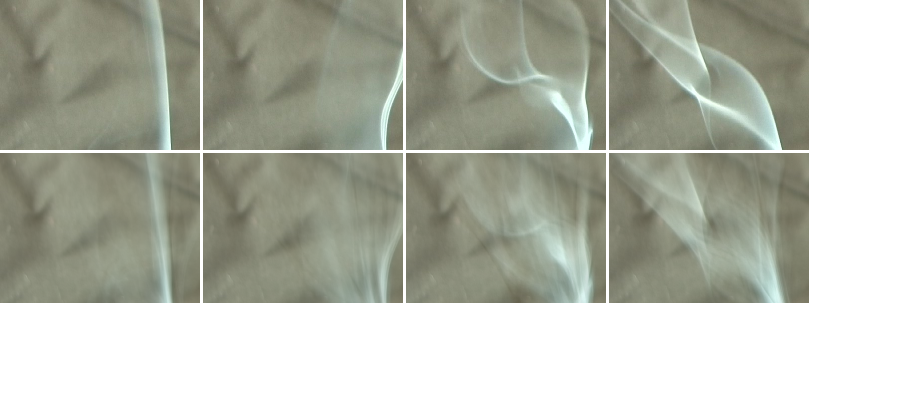

We used dynamic texture data sets from the DynTex texture database as test data. Each dynamic texture (DT) in these sets is typically represented by a 250-frame video sequence, which corresponds to a ten-second video. An analysed DT is processed frame by frame; each frame is a 400x300 RGB colour image. The following test DTs were chosen: smoke, steam, streaming water, sea waves, river, candle light, a close shot of a moving escalator, sheet, waving flag, leaves, straws and branches. The results show that, although there are some differences between the original and synthesized frames, the overall dynamics are preserved. Unfortunately, this similarity is very hard to quantify. Robust and reliable similarity comparison between two static textures is still an unsolved problem, and comparing an original and a synthesized DT sequence is even more complex. In some cases the synthesized DT is visually similar to the original but shows less detail; in others the motion in the synthesized sequence is blurred. The less detailed appearance is mainly caused by information loss during the dimensionality reduction phase, in which only about 15% of the original information is retained. The main advantage of this method is its stability in the synthesis step.
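To illustrate how a dimensionality reduction step can lead to the loss of detail described above, the following minimal sketch projects a frame sequence onto a truncated basis and reconstructs it. It rests on several assumptions that are not stated in this section: the reduction is taken to be an SVD-based principal component projection, "about 15% of the original information" is interpreted as keeping 15% of the components, and the function names `reduce_frames` and `reconstruct_frames`, the parameter `keep_fraction`, and the random stand-in data are all hypothetical. It is not the method evaluated here, only an illustration of the general idea.

```python
import numpy as np

def reduce_frames(frames, keep_fraction=0.15):
    """Project a frame sequence onto its leading principal components.

    frames: array of shape (n_frames, height, width, channels).
    keep_fraction: share of components kept (assumed reading of "15%").
    """
    n_frames = frames.shape[0]
    # Flatten every frame into one row vector.
    X = frames.reshape(n_frames, -1).astype(np.float64)
    mean = X.mean(axis=0)
    # Thin SVD of the centred data matrix.
    U, s, Vt = np.linalg.svd(X - mean, full_matrices=False)
    k = max(1, int(round(keep_fraction * len(s))))
    coeffs = U[:, :k] * s[:k]   # low-dimensional temporal coefficients
    basis = Vt[:k]              # spatial basis vectors
    return coeffs, basis, mean

def reconstruct_frames(coeffs, basis, mean, frame_shape):
    """Map low-dimensional coefficients back to image space."""
    X_hat = coeffs @ basis + mean
    return X_hat.reshape((-1,) + tuple(frame_shape))

# Small random stand-in for a DT sequence (not DynTex data).
rng = np.random.default_rng(0)
frames = rng.random((20, 6, 8, 3))

coeffs, basis, mean = reduce_frames(frames, keep_fraction=0.15)
approx = reconstruct_frames(coeffs, basis, mean, frames.shape[1:])

# Mean squared error between original and reconstructed frames.
mse = float(np.mean((frames - approx) ** 2))
print(f"kept {coeffs.shape[1]} of {frames.shape[0]} components, MSE = {mse:.4f}")
```

A per-pixel error such as the mean squared error above only measures frame-wise deviation. It says little about whether the perceived dynamics are preserved, which is why such a number cannot replace the visual comparison discussed in this section.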